Generator Functions in Python: For Data Nerds!

A powerful tool for dealing with data, and an even cooler function to learn :)

Today, we are going to learn about one of Python's most intriguing features: generator functions. These are not your typical functions. They're a handy tool for processing data more efficiently, especially when your datasets are so large that they could give traditional functions a run for their memory.

What are generator functions?

Any function that contains the yield keyword is a generator function. Simple, right? But what does yield do? Instead of returning a single value, yield lets the function produce a sequence of values over time. For example, instead of returning a whole list of items or an entire dataset, the function produces the items of the list, or the rows of the dataset, one at a time.

This lets generator functions produce values on the fly and pause between outputs while keeping their state. That makes them memory-efficient and performance-friendly.
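Here is a small sketch that contrasts the two approaches. One is a regular function that builds a whole list and returns it. The other is a generator function that yields the same values one at a time. The function names are made up for illustration.

```python
def squares_list(n):
    """Regular function: builds the whole list in memory, then returns it."""
    result = []
    for i in range(n):
        result.append(i * i)
    return result


def squares_gen(n):
    """Generator function: yields one square at a time, pausing in between."""
    for i in range(n):
        yield i * i


print(squares_list(5))      # [0, 1, 4, 9, 16]
gen = squares_gen(5)
print(type(gen).__name__)   # generator
print(list(gen))            # [0, 1, 4, 9, 16]
```

Both produce the same values. The generator, however, never needs to hold more than the current square.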

This has nothing to do with the trending and cool Generative AI that's been capturing everyone's attention; we are talking about functions that help us streamline our data processing tasks with elegance and efficiency.

How does it do that?

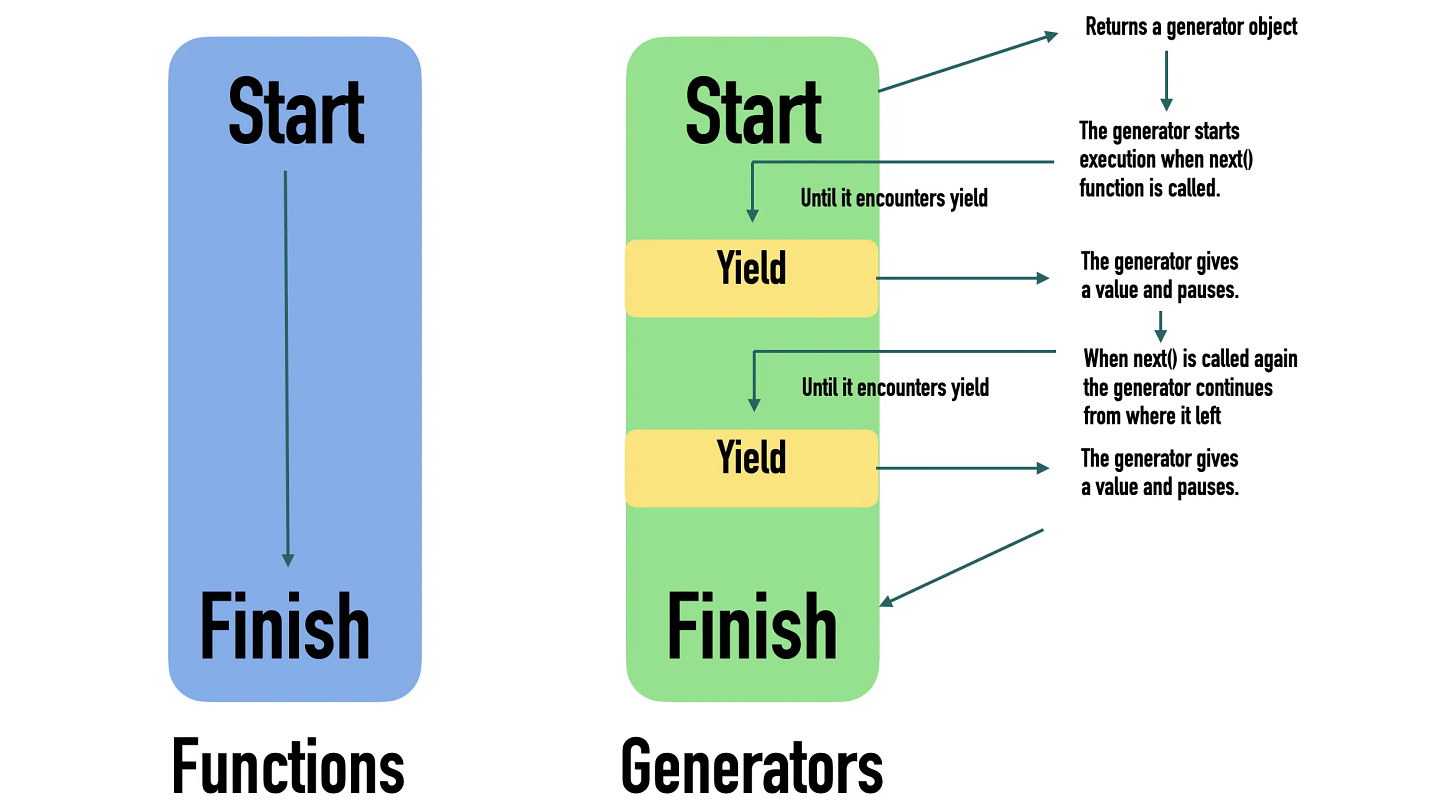

A normal function runs through all the lines of code within it and returns a value. Calling a generator function, however, does not run its body right away. Instead, it returns an iterator, specifically a generator object. The generator produces only one value at a time, so the remaining values are never stored in memory all at once. Each time the next() function is called on the object, the function resumes where it paused and runs until it reaches the next yield, which produces the next value.

Basically, generators let us loop through a sequence without storing all of it at once. You can learn more in detail about this here.
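The sketch below steps through a generator by hand with next() to show the pause-and-resume behavior. The countdown function is a made-up example. When the generator runs out of values, next() raises StopIteration, which is exactly how a for loop knows when to stop.

```python
def countdown(start):
    print("Starting countdown")   # runs only on the first next() call
    while start > 0:
        yield start               # pause here and hand back the current value
        start -= 1                # execution resumes here on the next next() call


gen = countdown(3)   # nothing is printed yet: the body hasn't started
print(next(gen))     # prints "Starting countdown", then 3
print(next(gen))     # 2
print(next(gen))     # 1

try:
    next(gen)        # the while loop ends, so the generator is exhausted
except StopIteration:
    print("Done")
```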

Let’s try to understand it from an example:

```python
def infinite_counter():
    count = 0
    while True:
        yield count
        count += 1

# Using the generator
for number in infinite_counter():
    print(number)
    if number >= 10:  # Stop at 10 to keep things sane!
        break
```

This is a simple infinite counter. Here's what's happening:

infinite_counter defines a generator function that starts counting from 0.

Inside an infinite loop, it yields the current count, then increments it.

In the for loop, calling infinite_counter() creates a generator object, and the loop pulls numbers from it one at a time, starting at 0.

The loop prints each number, and the break condition stops the loop after it prints 10, preventing an actual infinite loop.

This example shows the power of generators to handle potentially infinite sequences in a memory-efficient way, yielding one item at a time!
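The break inside the loop works. The standard library's itertools.islice offers another way to take a fixed number of items from an infinite generator. Here is a short sketch that repeats the same infinite_counter definition so it runs on its own:

```python
from itertools import islice


def infinite_counter():
    count = 0
    while True:
        yield count
        count += 1


# islice lazily takes the first 11 items (0 through 10) and then stops,
# so the infinite generator never runs further than needed
first_eleven = list(islice(infinite_counter(), 11))
print(first_eleven)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```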

Generator Expressions:

Generator functions are just one way to create generator objects. Another way is generator expressions. They look just like list comprehensions but produce generators. You can convert a list comprehension into a generator expression by replacing the square brackets [] with parentheses ().

```python
squares = (x**2 for x in range(10))
```

This generator expression describes a sequence of squared numbers that are computed only as they are requested. It shows how elegant and simple generator expressions are for on-the-fly data processing.
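Here is a quick sketch of how a generator expression behaves differently from the equivalent list comprehension. One detail worth knowing: a generator can be consumed only once, so iterating over it a second time yields nothing.

```python
squares_list = [x**2 for x in range(10)]   # list: all 10 values stored at once
squares_gen = (x**2 for x in range(10))    # generator: values computed on demand

print(sum(squares_gen))   # 285
print(sum(squares_gen))   # 0, because the first sum already exhausted the generator

# When a generator expression is the only argument, the extra parentheses can be dropped
total = sum(x**2 for x in range(10))
print(total)              # 285
```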

Generator Functions in Data Processing

Generator functions are the unsung heroes of memory management. Because they yield data incrementally, they can process information piece by piece rather than loading everything into memory at once. This approach is not just about being resourceful. It's a necessity when dealing with datasets that span gigabytes or even terabytes.

Generator functions are incredibly versatile and fit a wide range of scenarios beyond the basics:

Real-time data streams: Perfect for processing live data feeds, where data is continuous and potentially infinite.

Large files: Useful for reading and processing data without the need to load everything into memory simultaneously.

Data transformation pipelines: Implement stages of data transformation where each function passes its output to the next, efficiently handling data at each step.
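For the data transformation pipeline scenario, here is a minimal sketch in which each stage is a generator that consumes the previous one, so records flow through one at a time. The stage names and the "name,value" record format are made up for illustration. In practice, raw could be an open file object.

```python
def read_records(lines):
    """Stage 1: strip whitespace and skip blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield line


def parse_records(records):
    """Stage 2: split 'name,value' strings into (name, int) pairs."""
    for record in records:
        name, value = record.split(",")
        yield name, int(value)


def keep_large(pairs, threshold):
    """Stage 3: keep only pairs whose value exceeds the threshold."""
    for name, value in pairs:
        if value > threshold:
            yield name, value


raw = ["a,5\n", "\n", "b,42\n", "c,17\n"]
pipeline = keep_large(parse_records(read_records(raw)), threshold=10)
print(list(pipeline))  # [('b', 42), ('c', 17)]
```

No stage runs until list() starts pulling values. Each record then passes through all three stages before the next one is read.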

Let’s run through some examples because the author is not happy with the length of this article so far.

Example 1: Processing large files

Let’s say you want to filter out specific entries from a file based on certain criteria, such as error messages in a log file:

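```python
def filter_errors(log_file):
    # The file object is iterated lazily, one line at a time
    with open(log_file, 'r') as file:
        for line in file:
            if "ERROR" in line:
                yield line.strip()
```

This function goes through each line of the log and yields only those that contain error messages. Because the file is read line by line, only one line needs to be held in memory at a time. This shows how generator functions can filter large files lazily.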
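Here is a quick way to try filter_errors without a real log file. The sketch repeats the function so it runs on its own, writes a small made-up log to a temporary file with the standard tempfile module, and collects the matching lines.

```python
import os
import tempfile


def filter_errors(log_file):
    with open(log_file, 'r') as file:
        for line in file:
            if "ERROR" in line:
                yield line.strip()


# Write a tiny sample log to a temporary file (the contents are made up)
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
    tmp.write("INFO service started\n")
    tmp.write("ERROR disk full\n")
    tmp.write("WARNING high latency\n")
    tmp.write("ERROR connection lost\n")
    log_path = tmp.name

errors = list(filter_errors(log_path))
print(errors)  # ['ERROR disk full', 'ERROR connection lost']
os.remove(log_path)  # clean up the sample file
```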

Example 2: Data Loading and Preprocessing

Generators are particularly useful in machine learning for data loading and preprocessing. Libraries like TensorFlow and PyTorch support data loaders that can stream data from disk in batches using generator functions.
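The batching idea itself doesn't depend on any framework. Here is a minimal pure-Python sketch, using a hypothetical helper named batch, that groups items from any iterable (for example, lines streamed from a file) into fixed-size lists while reading lazily. On Python 3.12 and later, the standard library's itertools.batched does something similar but yields tuples instead of lists.

```python
from itertools import islice


def batch(iterable, batch_size):
    """Yield lists of up to batch_size items from any iterable, lazily."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return  # the source is exhausted
        yield chunk


# A stand-in for records streamed from disk
records = (i for i in range(10))
for b in batch(records, 4):
    print(b)
# [0, 1, 2, 3]
# [4, 5, 6, 7]
# [8, 9]
```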

TensorFlow extensively uses the concept of generators through its tf.data.Dataset API, which allows for efficient data loading, preprocessing, and augmentation on the fly during model training.

The from_generator method creates a Dataset from a Python generator. TensorFlow calls the generator function for you and streams the values it yields as dataset elements:

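```python
import numpy as np
import tensorflow as tf

def load_data(num_samples, num_features):  # hypothetical data source
    for _ in range(num_samples):
        features = np.random.rand(num_features).astype(np.float32)
        yield features, np.float32(np.random.randint(2))  # made-up label

dataset = tf.data.Dataset.from_generator(
    generator=load_data,
    output_signature=(tf.TensorSpec(shape=(None,), dtype=tf.float32),
                      tf.TensorSpec(shape=(), dtype=tf.float32)),
    args=(1000, 8),  # passed to load_data as NumPy values
)
dataset = dataset.batch(32)  # Batching data for training
```

Example 3: Principles in Pandas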

Pandas offers functionality that aligns with the principles of generators, useful when dealing with large datasets that might not fit into memory.

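For row-wise iteration, you can use iterrows and itertuples, which both return iterators that produce one row at a time. Keep in mind, though, that row-by-row iteration is usually much slower than vectorized pandas operations, so it isn't always the most efficient way to work with a DataFrame.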

```python
import pandas as pd

df = pd.DataFrame({'a': range(10), 'b': range(10, 20)})

# iterrows example: yields (index, Series) pairs, one row at a time
for index, row in df.iterrows():
    print(row['a'], row['b'])

# itertuples example: yields namedtuples, one row at a time
for row in df.itertuples(index=False):
    print(row.a, row.b)
```

Pro-Tip: Pandas' read_csv function can process large CSV files in manageable chunks, which is particularly helpful for large datasets. When you pass the chunksize parameter, the function returns an iterator that yields one DataFrame per chunk.

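```python
import pandas as pd

total_rows = 0
for chunk in pd.read_csv('large_file.csv', chunksize=100000):
    # Process each chunk here: each one is a DataFrame of up to 100,000 rows.
    # Counting rows stands in for your own processing step.
    total_rows += len(chunk)
```

Example 4: Web Crawling with Generators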

Scrapy, an asynchronous web scraping framework, uses generators and coroutines to handle web requests and responses efficiently. Here's a simplified example of a Scrapy spider that uses generators to crawl web pages:

```python
import scrapy

class ErrorLogSpider(scrapy.Spider):
    name = "error_log_spider"
    start_urls = ['http://example.com/logs']

    def parse(self, response):
        # Extract log page URLs
        log_urls = response.css('a::attr(href)').getall()
        for url in log_urls:
            yield response.follow(url, self.parse_log)

    def parse_log(self, response):
        # Extract error messages
        for error_msg in response.css('.error::text').getall():
            yield {'error': error_msg}
```

We start from a main page, follow links to log pages, and extract error messages, all while using generators to facilitate efficient data extraction and processing in web crawling tasks.

Example 5: Related concepts in other libraries

This concept of deferring computation and managing resources efficiently is a common thread that ties together various Python libraries. NumPy can create arrays directly from iterable sequences, which saves memory during data manipulation. Similarly, PySpark uses lazy evaluation to process big data efficiently across distributed systems. It executes transformations only when an action requires the result, which optimizes computation and resource utilization.
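As a small illustration of the NumPy side, np.fromiter builds an array directly from an iterable such as a generator expression, without first materializing a Python list. Here is a minimal sketch:

```python
import numpy as np

# np.fromiter consumes the generator item by item; passing count lets
# NumPy allocate the output array up front instead of growing it
squares = np.fromiter((x * x for x in range(5)), dtype=np.int64, count=5)
print(squares.tolist())  # [0, 1, 4, 9, 16]
```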

Understanding and leveraging these principles allows data professionals to handle larger datasets, speed up data processing, and write more efficient and scalable Python code.

Hopefully, these examples illustrate the power of generators, iterators, and related concepts, and highlight their importance for efficient data processing and analysis in various contexts. Whether you're processing streams of real-time data or chipping away at massive datasets, embracing generator functions can elevate your data handling to new heights.

That is it from me! Have you used similar techniques before, or do you see new opportunities to apply them in your work? I hope this exploration was helpful in some way!

If you found value in this article, please share it with someone who might also benefit from it. Your support helps spread knowledge and inspires more content like this. Let's keep the conversation going—share your thoughts and experiences below!