The Sustainability Impacts of ChatGPT: A Comprehensive Analysis

Explore how Large Language Models (LLMs) influence the environment and how your actions can drive sustainable AI development.

Large Language Models (LLMs) like GPT (Generative Pre-trained Transformer) and LLaMA (Large Language Model Meta AI) have revolutionized the way we interact with data and machines, providing deep insights and enhancing human-machine interactions. As transformative as LLMs are for tasks like translation, content generation, and customer support, they come with substantial environmental costs primarily due to their high energy demands.

This article covers the essential technical background, how LLMs affect our environment, the ongoing efforts to mitigate these effects, and how policies and personal actions can contribute to more sustainable AI practices.

What are Large Language Models?

ChatGPT, Claude, Gemini, and yes, BERT (Bidirectional Encoder Representations from Transformers) are all Large Language Models, but what are they and why are they so energy intensive?

Large Language Models (LLMs) are a type of artificial intelligence system trained on vast amounts of text data, which allows them to generate human-like responses, understand and process natural language, and perform a wide range of language-related tasks. In effect, the model looks for patterns in huge amounts of text (articles, books, posts, and so on), called training data, to figure out what to say back to you.

Essentially, LLMs work by using neural networks to identify patterns and relationships in the training data, which can then be used to generate new text, answer questions, translate between languages, and more. These neural networks are built from stacked layers that learn to pick up different elements of human language, from simple grammar to complex idioms and, above all, context.

The training process involves repeatedly adjusting these layers to minimize errors in output, requiring multiple iterations across potentially billions of parameters. For example, GPT-3 has about 175 billion parameters and was trained on text drawn from about 45TB of raw data across several datasets. By today's standards, that is a small to medium LLM. The more capable a model is, the more complex and resource-intensive it generally gets.
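To make "repeatedly adjusting to minimize errors" concrete, here is a minimal sketch of gradient descent on a toy model with just two parameters. It is only an illustration: the function name `train_linear`, the data, and the learning rate are made up for this example, and a real LLM applies the same idea to billions of parameters.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: how far off are the current predictions?
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Nudge each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = train_linear(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Every pass through the loop is one iteration. Scaling the same loop to billions of parameters and terabytes of text is where the energy bill comes from.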

This computation is not only data-intensive (remember the huge amounts of training data?) but also power-hungry, and it typically runs on specialized hardware like GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units). You can already see the storage, training, and operational costs this can incur.

Understanding the Environmental Impacts of LLMs

Each phase of an LLM's life cycle has its own footprint:

Training Impacts:

The training process requires considerable computational resources, typically involving multiple high-powered GPUs or TPUs that run continuously for weeks or even months. This consumes large amounts of electricity, contributing to the carbon footprint of LLMs. AI companies boast about how powerful their new models are and how much information they can process, but they rarely discuss the computational and environmental cost of those models.
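A common back-of-the-envelope way to turn "GPUs running for weeks" into a footprint is to multiply hardware power by run time, data center overhead, and the carbon intensity of the grid. The sketch below assumes that simple formula. The function name `training_footprint` and every input value are hypothetical placeholders, not measurements of any real model.

```python
def training_footprint(num_gpus, gpu_power_kw, hours, pue, kg_co2_per_kwh):
    """Return (energy in kWh, emissions in kg CO2) for one training run."""
    # Energy drawn by the accelerators alone.
    it_energy_kwh = num_gpus * gpu_power_kw * hours
    # PUE (power usage effectiveness) adds data center overhead such as cooling.
    total_energy_kwh = it_energy_kwh * pue
    # Grid carbon intensity converts energy into emissions.
    emissions_kg = total_energy_kwh * kg_co2_per_kwh
    return total_energy_kwh, emissions_kg


# Hypothetical placeholder inputs, NOT figures for any real model or data center.
energy_kwh, co2_kg = training_footprint(
    num_gpus=1000,        # assumed cluster size
    gpu_power_kw=0.3,     # assumed average draw per GPU
    hours=30 * 24,        # assumed 30 days of continuous training
    pue=1.2,              # assumed data center overhead
    kg_co2_per_kwh=0.4,   # assumed grid carbon intensity
)
print(f"{energy_kwh:,.0f} kWh, {co2_kg / 1000:,.1f} tonnes CO2")
```

The point of the sketch is the shape of the calculation: each factor is something a company could report, which is why transparency matters so much.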

For example, students from the University of Copenhagen developed a tool to predict the carbon footprint of algorithms and estimated that one training session with GPT-3 uses as much energy as 126 homes in Denmark use in a year.

In another well-known study, researchers at the University of Massachusetts, Amherst, analyzed the carbon footprint of transformer models.

They found that training a transformer with around 213 million parameters, including a neural architecture search, emits almost 5 times the lifetime emissions of the average American car, or about as much as 315 round-trip flights from New York to San Francisco.

Just to put things in perspective, let’s see what an actual model’s carbon footprint might look like. The 213-million-parameter model above produces 626,155 lbs of CO2. Claude 3 is rumored to have 500 billion parameters. Let’s assume linear scaling (for our simple brains to comprehend), which would be an increase of about 2,347x.

So 626,155 x 2,347 is a whopping 1,469,585,785, or roughly 1.5 billion lbs of CO2, for a model like Claude 3 that we are using nowadays. Treat this as a rough illustration rather than a measurement, since real emissions do not scale neatly with parameter count.
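The arithmetic behind this estimate can be checked in a few lines. This sketch simply assumes emissions grow in proportion to parameter count, which is a crude simplification, and the 500 billion figure is a rumor rather than a confirmed number. The constant and function names are made up for the example.

```python
BASE_PARAMS = 213_000_000          # parameters of the model in the UMass Amherst study
BASE_EMISSIONS_LBS = 626_155       # lbs of CO2 quoted for that model
TARGET_PARAMS = 500_000_000_000    # rumored size, not a confirmed figure


def scaled_emissions_lbs(target_params, base_params=BASE_PARAMS,
                         base_lbs=BASE_EMISSIONS_LBS):
    """Scale emissions in proportion to parameter count (a crude assumption)."""
    return base_lbs * target_params / base_params


factor = TARGET_PARAMS / BASE_PARAMS
estimate_lbs = scaled_emissions_lbs(TARGET_PARAMS)
print(f"scale factor: about {factor:,.0f}x")
print(f"estimated CO2: about {estimate_lbs / 1e9:.2f} billion lbs")
```

The unrounded result is close to 1.47 billion lbs, which rounds to the roughly 1.5 billion lbs quoted above.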

This is, of course, due to the energy-intensive nature of the training process, which involves running the model through billions of computations. But why are companies shy about revealing such numbers?

Storage and Operational Impacts:

The data centers that power LLMs are also a major source of environmental impact. These facilities require large amounts of energy for cooling, ventilation, and other operational needs. They also generate e-waste from the constant upgrading and replacement of hardware.

A recent study at the University of California, Riverside, revealed the significant water footprint of LLMs. It estimated that training GPT-3 in Microsoft's data centers consumed approximately 700,000 liters of freshwater, about as much water as is needed to make 320 Tesla vehicles.

That figure covers training, but the model also uses a lot of water during inference (when you are using it). For a brief exchange involving 20-50 queries, the water usage is comparable to a 500 ml bottle. With so many people using these tools, the cumulative water footprint of these interactions is significant. Even if we assume 1 billion users having just one such exchange each, that is 500 million liters of water, enough to fill around 200 Olympic-sized swimming pools.
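The water estimate is simple multiplication, sketched below. The 1 billion figure is the illustrative round number used in the text, one exchange per user is an assumption, and the pool volume assumes a nominal 50 m x 25 m x 2 m Olympic pool (2.5 million liters).

```python
WATER_PER_EXCHANGE_L = 0.5     # one 500 ml bottle per 20-50 query exchange
USERS = 1_000_000_000          # illustrative round number, one exchange each
OLYMPIC_POOL_L = 2_500_000     # assumed nominal volume: 50 m x 25 m x 2 m


def inference_water_liters(users, liters_per_exchange=WATER_PER_EXCHANGE_L):
    """Total water for one short exchange per user."""
    return users * liters_per_exchange


total_liters = inference_water_liters(USERS)
pools = total_liters / OLYMPIC_POOL_L
print(f"{total_liters:,.0f} liters, about {pools:,.0f} Olympic pools")
# prints: 500,000,000 liters, about 200 Olympic pools
```

Since many people have far more than one exchange, the real total is likely higher than this single-exchange estimate.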

Moreover, the storage and hosting of LLMs, which can be terabytes in size, requires dedicated server infrastructure, further adding to the environmental footprint.

When Bloomberg asked OpenAI about these sustainability concerns, the company had this to say:

‘OpenAI runs on Azure, and we work closely with Microsoft’s team to improve efficiency and our footprint to run large language models.’

There are also related discussions on the OpenAI Community forum.

Hardware and Other Impacts:

LLMs also require significant hardware resources, such as high-performance GPUs, storage, and memory. One article I read carried an update from its author: they had originally assumed ChatGPT runs on 16 GPUs, when in fact it runs on more than 29,000. The manufacturing, transportation, and eventual disposal of this hardware also have environmental impacts.

This raises concerns about environmental justice, as the resource-intensive nature of these models may disproportionately affect marginalized communities and developing regions that have less access to clean energy and sustainable infrastructure.

How to be more sustainable with AI?

As the concerns around the environmental impacts of LLMs have grown, there have been various efforts and advancements to make these models more sustainable. But I can’t lie, I do not feel like these efforts are enough. I am not convinced that the speed of these advancements can keep up with the exponential growth of LLMs and AI.

Some of the key areas that we need to start or continue focusing on:

1. What can companies do?

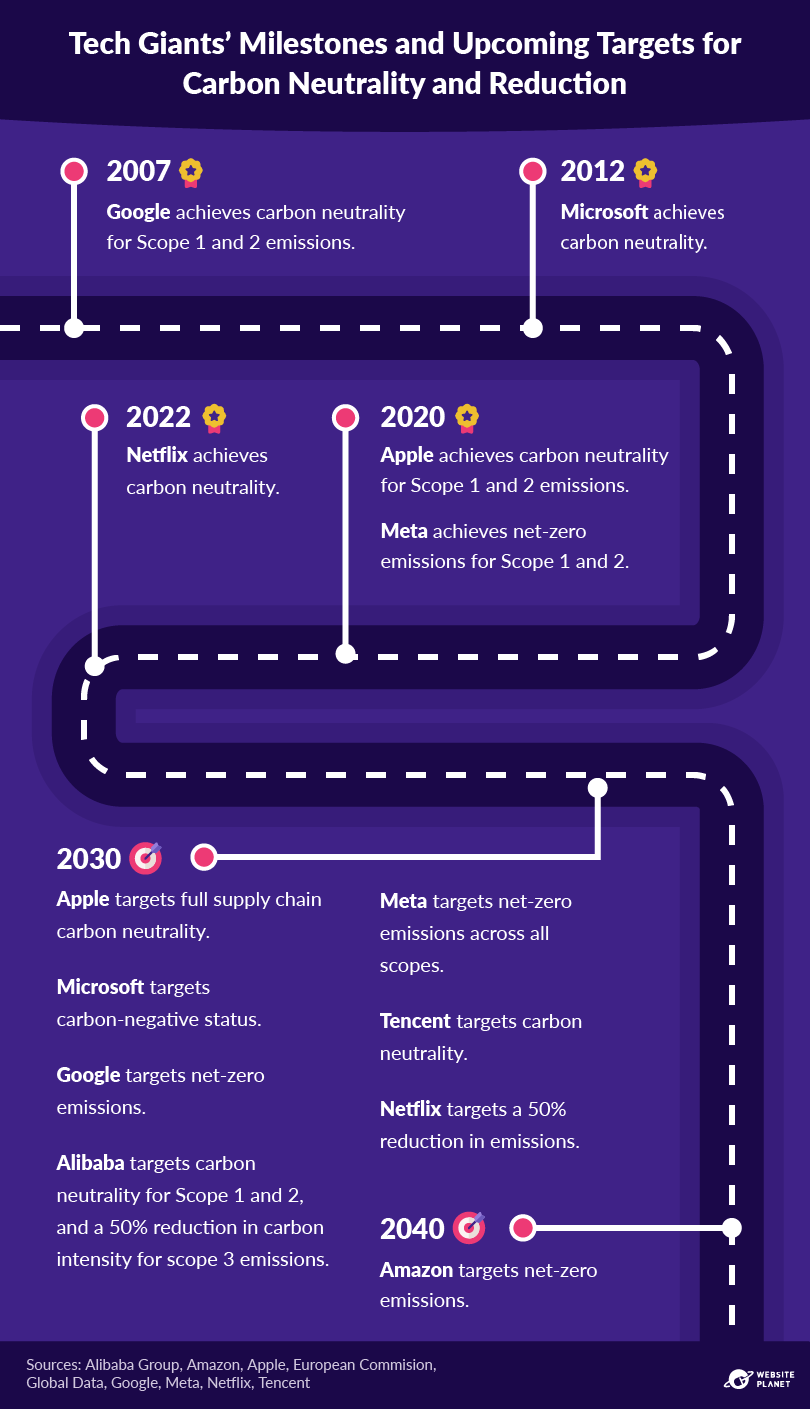

Companies are investing heavily to reduce the energy consumption of AI tools and models. Some are exploring carbon offsets to counterbalance the emissions generated by their LLMs. Microsoft, Google, Apple, and Meta have all pledged to reach carbon neutrality or net-zero emissions. Google developed tensor processing units (TPUs), which are designed to be more energy-efficient than traditional GPUs for AI workloads.

But the public still needs to know how much impact AI has on the Earth. Companies need to be transparent about their footprints and work with the public to make AI more sustainable. Beyond that, they need to adopt renewable energy and hardware recycling programs.

This is not just about being green in the public eye; it’s about pushing the sustainability agenda to the forefront and setting industry standards.

2. What can developers do?

There is already an emphasis on researching and developing more efficient models. Techniques such as transfer learning, pruning, quantization, and knowledge distillation are being employed to make models more efficient with little or no loss in performance.
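To give a feel for one of these techniques, here is a minimal sketch of quantization: storing weights as 8-bit integers plus one shared scale instead of 32-bit floats, which cuts storage roughly four times. This is a simplified symmetric scheme written for illustration, with made-up weights and function names. Production libraries use more refined variants.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.82, -1.27, 0.05, 0.0, 0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"largest rounding error: {max_error:.5f}")
```

The rounding error is bounded by half the scale, which is why a well-chosen scale can shrink a model with only a small effect on its outputs.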

Developers should prioritize building and contributing to open-source projects focused on sustainable AI practices. More training in eco-conscious programming can also be embedded in developer education.

3. What can policymakers do?

Policymaking plays a crucial role in guiding the development and implementation of AI technologies sustainably. Policies that incentivize energy-efficient AI, set emissions targets, and encourage the use of renewable energy can help drive companies to adopt more sustainable practices.

The EU’s Green Deal includes specific provisions for digital sector sustainability, aiming to significantly reduce the sector's carbon and electronic waste footprint. The EU's AI Act, the first-ever legal framework on AI, addresses the risks of AI. These are a good start, but we need more, especially in the US. We need to enact policies that require tech companies to report and reduce their carbon footprints. Transparency in energy consumption should be mandatory, not optional.

4. What can YOU do?

Of course, as individuals, raising awareness and supporting green AI initiatives is crucial, but it starts with being well-informed. Understanding the environmental implications of AI use and sharing this knowledge can catalyze collective action toward sustainable practices. Being vocal and using our influence matters significantly.

Additionally, on the technical side, using concise prompts and selecting models that are efficient in processing can reduce computational demands. By streamlining the complexity and length of prompts and reducing unnecessary interactions, we can significantly cut down on the computational resources needed. This not only conserves energy but also aligns with more sustainable AI usage practices.
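As a rough illustration of what "concise" means here, the sketch below compares two prompts that ask for the same thing. It uses a whitespace word count as a stand-in for tokens, which is only a crude proxy: real tokenizers split text differently, and the helper name `approx_tokens` and both prompts are made up for this example.

```python
def approx_tokens(text):
    """Very rough proxy for token count: whitespace-separated words."""
    return len(text.split())


# Two hypothetical prompts asking for the same thing.
verbose = (
    "Hello, I hope you are doing well. I was wondering if you could "
    "possibly help me by writing a short summary of the following article, "
    "if that is not too much trouble."
)
concise = "Summarize the following article in three sentences."

saved = 1 - approx_tokens(concise) / approx_tokens(verbose)
print(f"{approx_tokens(verbose)} vs {approx_tokens(concise)} words "
      f"({saved:.0%} shorter)")
```

Shorter inputs mean less text for the model to process on every request, and the savings add up across millions of requests.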

Conclusion

While LLMs offer unprecedented capabilities, their environmental impact cannot be overlooked. It’s clear that bold steps are needed from all stakeholders—companies, developers, policymakers, and users alike. The path to sustainable AI is complex and challenging, but with concerted effort and innovation, it’s possible to harness the benefits of AI without compromising our planet.

That is it from me! I hope this exploration was helpful in some way! What are your thoughts on sustainable AI? What are some trends you noticed?
